What we do

For the past two semesters, I have been an undergraduate researcher at the Interface Ecology Lab. Our lab focuses on the way people create, explore, curate, and interact with digital media. To see some of what we do, I suggest checking out IdeaMÂCHÉ.

MICE shows off the meta-metadata language our lab has created. This language consists of extraction rules for web pages and a number of "wrappers" that use those rules to extract metadata from web pages. Each of these wrappers is part of a type system. We call this entire architecture BigSemantics, and it is open source.
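To make the idea of wrappers in a type system a bit more concrete, here is a minimal Python sketch. It is not the meta-metadata language itself: the `Wrapper` class, the field names, the sample page, and the use of regular expressions as extraction rules are all assumptions made for illustration. It only shows how a wrapper for a specific kind of page could inherit rules from a more general type and add its own.

```python
import re
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Wrapper:
    """Hypothetical stand-in for a wrapper: a named type plus extraction rules."""
    name: str
    rules: Dict[str, str]  # field name -> regex with one capture group (assumed rule format)
    parent: Optional["Wrapper"] = None

    def all_rules(self) -> Dict[str, str]:
        # Start from the parent's rules, then let this type override or extend them.
        inherited = self.parent.all_rules() if self.parent else {}
        return {**inherited, **self.rules}

    def extract(self, html: str) -> Dict[str, str]:
        # Apply every rule to the page and keep the fields that matched.
        metadata = {}
        for field_name, pattern in self.all_rules().items():
            match = re.search(pattern, html, re.DOTALL)
            if match:
                metadata[field_name] = match.group(1).strip()
        return metadata


# A general type, and a more specific type that inherits from it (both made up).
document = Wrapper("document", {"title": r"<title>(.*?)</title>"})
artwork = Wrapper(
    "artwork",
    {"artist": r'<span class="artist">(.*?)</span>'},
    parent=document,
)

# A made-up page, not taken from any real museum site.
page = (
    "<html><head><title>Fountain</title></head>"
    '<body><span class="artist">Marcel Duchamp</span></body></html>'
)
result = artwork.extract(page)
```

Here `artwork` picks up the `title` rule from `document` without restating it, which is the part of the type system this sketch is meant to show.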

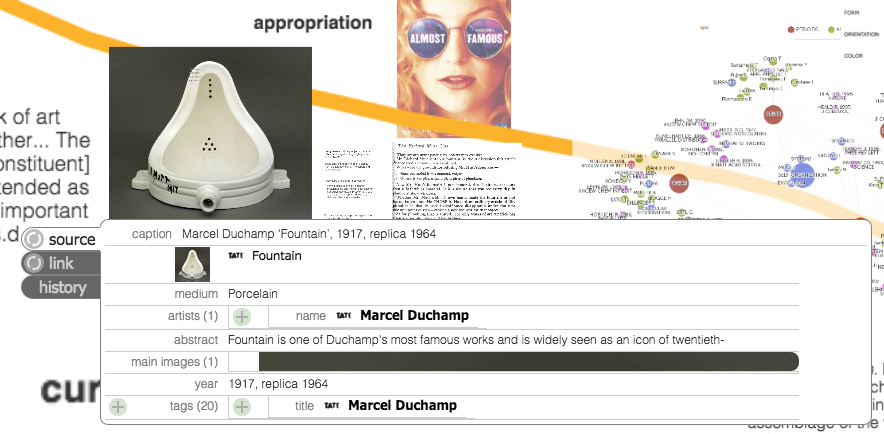

IdeaMÂCHÉ is a tool that allows users to collect, organize, and share text, pictures, and links gathered from the web. Think Pinterest + Photoshop-lite. It is often used as a brainstorming tool or for presentations. It also takes advantage of BigSemantics and MICE, as each piece of web content has metadata associated with it that can be viewed from within a mache (shown in the screenshot below). If you try to view a mache, it will say you need a plugin, but feel free to go ahead. The plugin is only really necessary if you want to create your own maches.

In this mache, a user has dragged in a photo of Duchamp's 'Fountain' from the Tate Modern website.

What I do

I have worked on a number of things during my time in the lab. I started by authoring wrappers for a number of art museum sites and some commerce sites. After that, I worked on calculating and visualizing usage statistics for IdeaMÂCHÉ using JavaScript and D3. This was part of an internal app that helps us understand the way people use the software.

Currently, I am improving the IdeaMÂCHÉ Chrome Extension. I added functionality to extract metadata from pages on the client side, a process that previously required a call to a Java-based service. Doing things client side has a number of advantages, such as being able to see dynamically generated parts of the DOM. In addition, I am building a "Monadic Metadata Viewer" for my Undergraduate Research Thesis. This viewer uses BigSemantics to visualize networks of linked metadata. The network is shown as a physically based model, where similar nodes are closer together on the screen. For a better idea of what monadic exploration/visualization is, check out recent work by Marian Dörk and Bruno Latour.
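For a rough feel of how a physically based layout can pull similar nodes together, here is a small Python sketch. It is not the viewer's actual model: the `layout` function, the node names, and the similarity scores are all made up for illustration, and the technique is a simple spring relaxation in which each pair of nodes is nudged toward a target distance of `1 - similarity`.

```python
import math
from itertools import combinations


def layout(similarity, positions, steps=500, rate=0.05):
    """Relax node positions so that similar nodes end up closer together.

    similarity: dict mapping (node, node) pairs to a score in [0, 1] (assumed input format)
    positions:  dict mapping node -> (x, y) starting position
    """
    pos = {node: list(xy) for node, xy in positions.items()}
    for _ in range(steps):
        for a, b in combinations(sorted(pos), 2):
            sim = similarity.get((a, b), similarity.get((b, a), 0.0))
            target = 1.0 - sim  # more similar -> shorter spring
            dx = pos[b][0] - pos[a][0]
            dy = pos[b][1] - pos[a][1]
            dist = math.hypot(dx, dy) or 1e-9  # avoid dividing by zero
            # Positive when the pair is too far apart, negative when too close.
            shift = rate * (dist - target) / dist
            pos[a][0] += shift * dx
            pos[a][1] += shift * dy
            pos[b][0] -= shift * dx
            pos[b][1] -= shift * dy
    return pos


def distance(pos, a, b):
    return math.hypot(pos[a][0] - pos[b][0], pos[a][1] - pos[b][1])


# Hypothetical metadata nodes and similarity scores.
scores = {
    ("artwork_a", "artwork_b"): 0.9,  # very similar
    ("artwork_a", "product_c"): 0.1,
    ("artwork_b", "product_c"): 0.1,
}
start = {"artwork_a": (0.0, 0.0), "artwork_b": (1.0, 0.0), "product_c": (0.0, 1.0)}
final = layout(scores, start)
```

After relaxation, the two similar nodes sit much closer to each other than either does to the third. A real viewer would more likely lean on an existing force layout (D3 ships one) than on a hand-rolled loop like this.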